Tuning PHP FPM on nginx

As the Store Locator Plus® SaaS platform continues to grow, we are finding it more important than ever to fine-tune our web server and PHP processes to better serve the larger data and network demands. A recent performance review showed process timeouts during large data imports and side-loading, especially when the read and write endpoints hit the same server node. Here are some things we did to improve performance.

Get off faux sockets

PHP FPM is typically installed with file-based (Unix domain) sockets. While this keeps traffic off the network hardware, most modern servers are equipped with fiber-ready network connections. These network ports, and the TCP stack that interfaces with them, can often handle a higher peak load of I/O requests than the file system can manage via the “pretend” sockets, which run through the operating system's file I/O requests.

If your server is running on solid state drives there may be less disparity between the I/O rate of a network stack and file stack. The servers we run use high-end SSD drives and high speed network cards — yet we still found we could push through nearly twice as many requests to PHP-FPM from nginx when switching to TCP sockets.

Configure PHP FPM for TCP sockets

You’ll need to configure your PHP FPM pool to listen on 127.0.0.1:9000, which is port 9000 on the network loopback address. It is also a good idea to restrict the allowed clients to the local host.

;listen = /run/php/php7.0-fpm.sock

listen = 127.0.0.1:9000

listen.allowed_clients = 127.0.0.1

Change nginx fastcgi params

Now go tell nginx to talk to PHP-FPM on the new sockets. You’ll need to do this for every site, snippet, or other included config file that directs PHP traffic.

location ~ \.php$ {

include snippets/fastcgi-php.conf;

# default: faux sockets via unix file

#fastcgi_pass unix:/run/php/php7.0-fpm.sock;

# modified: actual TCP socket

fastcgi_pass 127.0.0.1:9000;

}

This article on Servers for Hackers will give you additional insight.
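After restarting PHP-FPM and reloading nginx, it can be worth confirming that something is actually listening on the loopback port. The following is a minimal sketch, assuming the `ss` utility from iproute2 is installed and that its `-ltn` output keeps the usual State, Recv-Q, Send-Q, Local Address:Port column order. The helper name `is_listening` is hypothetical.

```shell
#!/bin/sh
# is_listening ADDR:PORT
# Reads `ss -ltn` output on stdin and succeeds (exit status 0) if
# ADDR:PORT appears as the local address of a LISTEN socket.
is_listening() {
  awk -v addr="$1" '
    $1 == "LISTEN" && $4 == addr { found = 1 }
    END { exit !found }
  '
}

# Typical use on the server (not run here):
#   ss -ltn | is_listening 127.0.0.1:9000 && echo "PHP-FPM is listening on TCP"
```

Running `sudo nginx -t` before reloading nginx is a cheap way to catch a typo in the edited `fastcgi_pass` lines.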

Look At PHP FPM Memory Consumption

Use a command like htop or ps to review memory consumption. In htop the relevant columns are VIRT – the virtual memory size, meaning everything the process has mapped, RES – the resident memory, or physical memory in use by the process, and SHR – the portion of that memory that may be shared with other processes.

htop

You typically want to look at RES as the amount of memory each process uses. Average it out. BUT you must know how your PHP and web process libraries are configured as well as the shared memory stack on your server. Most libraries in PHP are shared. That means the bulk of the code, which can be as much as 80% of the shared library memory, is only loaded ONCE no matter how many PHP processes you activate.

In our setup we average 139M of resident memory and 123M of shared memory. That makes sense, as most of our web processes are not only using the same exact PHP libraries but also a huge chunk of identical PHP code. That leaves a data stack of about 16M per PHP process (139M - 123M), which is reasonable.

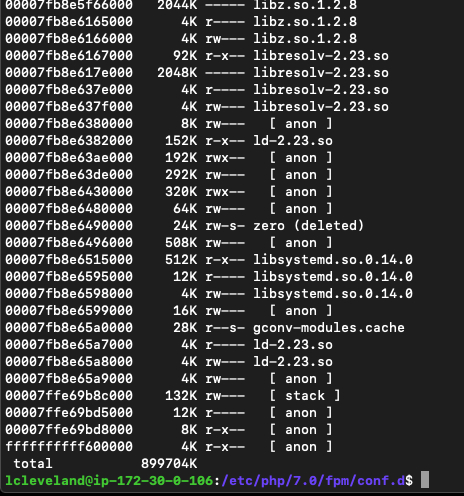
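The averaging and the resident-minus-shared arithmetic can be sketched in shell. This assumes the FPM workers run under the process name `php-fpm7.4` (it varies by PHP version and distribution) and that `/proc/<pid>/statm` is available, as on Linux. Both helper names are hypothetical.

```shell
#!/bin/sh
# avg_rss_mib: reads one RSS value in KiB per line (as printed by
# `ps -o rss=`) and prints the average in whole MiB.
avg_rss_mib() {
  awk '{ sum += $1; n++ }
       END { if (n) printf "%d\n", sum / n / 1024; else print 0 }'
}

# private_mib PAGE_SIZE_BYTES: reads one /proc/<pid>/statm line on stdin
# (fields are counted in pages: size resident shared ...) and prints
# resident minus shared in whole MiB.
private_mib() {
  awk -v page="$1" '{ printf "%d\n", ($2 - $3) * page / 1048576 }'
}

# Typical use on the server (not run here):
#   ps -C php-fpm7.4 -o rss= | avg_rss_mib
#   private_mib "$(getconf PAGESIZE)" < /proc/2963/statm
```

The second helper gives a per-process figure comparable to RES minus SHR in htop, which is the number that matters when estimating how many children fit in RAM.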

pmap

You can validate this by using the pmap command with the process id:

# sudo pmap 2963

This returns the entire memory map of process #2963.

In the example below 899704K of total memory is in use but a large chunk is system libs, PHP libs, and web server lib shared code.

The visibility that pmap provides may reveal libraries or features you have enabled and can disable. We found a production server with xdebug libraries still active. Turning that off cut nearly 50M of resident memory from every process!
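To spot an individual library such as xdebug in a memory map, the extended `pmap -x` output can be filtered by mapping name. The following is a rough sketch, assuming the procps column order of Address, Kbytes, RSS, Dirty, Mode, Mapping. `mapped_rss_kib` is a hypothetical helper name.

```shell
#!/bin/sh
# mapped_rss_kib PATTERN
# Reads `pmap -x <pid>` output on stdin and prints the summed RSS (KiB)
# of every mapping whose name matches PATTERN (case-insensitive).
mapped_rss_kib() {
  awk -v pat="$1" '
    tolower($NF) ~ tolower(pat) && $3 ~ /^[0-9]+$/ { sum += $3 }
    END { print sum + 0 }
  '
}

# Typical use on the server (not run here):
#   sudo pmap -x 2963 | mapped_rss_kib xdebug
```

This only counts pages mapped from the library file itself, so the real saving from disabling an extension can be larger than the number printed.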

free -m

Looking at the system memory with free -m gives you a high-level overview of total system memory usage. It reports the total memory, the memory used at the moment, free memory, shared memory, and buffers.

You will want to track buffers and memory used by non-PHP processes if you run other apps on your server such as the database. Our web servers are only serving web apps, so there is almost no additional overhead. That means I can use the buffer value in calculating how many PHP processes to spin up.
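If you want these numbers in a script rather than reading them by eye, the `Mem:` row of `free -m` can be picked apart by column name. The following is a small sketch, assuming the header row names the columns in the same order as the values on the `Mem:` row. Column names differ between procps versions (for example `buffers` versus `buff/cache`), and `free_field` is a hypothetical helper.

```shell
#!/bin/sh
# free_field COLUMN
# Reads `free -m` output on stdin and prints the value of COLUMN
# (for example "total" or "buff/cache") from the Mem: row.
free_field() {
  awk -v name="$1" '
    # The header has no label column, so header field i lines up
    # with field i + 1 on the Mem: row.
    NR == 1 { for (i = 1; i <= NF; i++) if ($i == name) col = i + 1 }
    $1 == "Mem:" && col { print $col }
  '
}

# Typical use on the server (not run here):
#   free -m | free_field total
```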

Calculate PHP Max Processes

The simple version of the formula:

(total system ram - used by apps ram - buffer ram) / ram use per PHP app

16GB - 1GB (various system processes) - 500M (cache process) - 500M (php) = 14GB for PHP apps / 16M = about 800 max processes

In our example server from the cluster, a web-app specific node, there is 16GB available, of which 1GB is consumed by various server processes, 500MB by a high speed local cache, and another 500M by PHP and related shared code. That leaves 14G for PHP apps, which average 16M per process. Each node can handle around 800 simultaneous web requests or PHP-FPM children (the raw division comes out closer to 875 to 900, so 800 leaves some headroom).
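The formula can be written out as shell integer arithmetic. All values are in MB, `max_children` is a hypothetical helper name, and the inputs are the example figures from this section with 1GB treated as 1024MB (an assumption).

```shell
#!/bin/sh
# max_children TOTAL_MB OVERHEAD_MB PER_PROCESS_MB
# Prints how many PHP-FPM children fit in the RAM left after overhead
# (integer division, so the result is rounded down).
max_children() {
  total_mb=$1
  overhead_mb=$2
  per_process_mb=$3
  echo $(( (total_mb - overhead_mb) / per_process_mb ))
}

# 16GB total, 2GB overhead (1GB system + 500M cache + 500M PHP), 16M per process
max_children 16384 2048 16   # prints 896
```

The result is a ceiling rather than a target. Setting the real limit somewhat below it leaves room for spikes in per-process memory.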

PHP FPM Pool Parameters

Tuning the PHP configuration file is also important. Here we are referencing PHP 7.4 and the FPM service on Ubuntu. The configuration file we are interested in lives at /etc/php/7.4/fpm/pool.d/www.conf

pm.max_children

This should be set based on total available memory, per the calculations above. We are now running on a server with 8GiB of RAM, 1GiB of which is allocated to system overhead and the main PHP engine itself. That leaves 7GiB for PHP application RAM. Our app now consumes 300MB per process.

(Total RAM – System Overhead) / RAM per process = 7G / 300M = 23.3 children max, so we’ll set that at 20.

pm.start_servers

Some online resources recommend setting this to CPU cores x 4; however, we found we could not set it to more than the number of CPU cores.

pm.min_spare_servers

Some online resources recommend setting this to CPU cores x 2; however, we found we could not set it to more than the number of CPU cores.

pm.max_spare_servers

Some online resources recommend setting this to CPU cores x 2, and we left it at that.
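Putting the four settings together, here is a sketch that prints pool values from a core count and a `pm.max_children` ceiling. It assumes `pm = dynamic`, the mode in which the start and spare settings apply. Using the core count itself for `pm.start_servers` and `pm.min_spare_servers` is an illustrative choice within the limit described above, not a value stated in this article. `suggest_pool` is a hypothetical helper, not something FPM provides.

```shell
#!/bin/sh
# suggest_pool CORES MAX_CHILDREN
# Prints candidate pool settings: start and min spare at the core
# count, max spare at cores x 2, all capped by MAX_CHILDREN.
suggest_pool() {
  cores=$1
  max_children=$2

  max_spare=$(( cores * 2 ))
  if [ "$max_spare" -gt "$max_children" ]; then
    max_spare=$max_children
  fi

  start=$cores
  if [ "$start" -gt "$max_spare" ]; then
    start=$max_spare
  fi

  printf 'pm = dynamic\n'
  printf 'pm.max_children = %d\n' "$max_children"
  printf 'pm.start_servers = %d\n' "$start"
  printf 'pm.min_spare_servers = %d\n' "$start"
  printf 'pm.max_spare_servers = %d\n' "$max_spare"
}

# Typical use on the server (not run here):
#   suggest_pool "$(nproc)" 20
```

The caps keep the spare and start values consistent with each other and below the child limit, which FPM checks when it loads the pool.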

Reindexing in the database

Keep in mind that your PHP processes do not run in a vacuum. Most PHP apps running on web servers are going to talk to a database. If you spin up 800 PHP processes, they will very likely need 800 database connections, even when using persistent connections. Each web session will demand its own channel for most apps.

Make sure your database server is configured to handle all those connections. We run on Amazon Aurora database clusters, which vary greatly in how many connections they can handle. Make sure max_connections on the database engine matches how many connections you may throw at it.
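A quick sanity check of that relationship, assuming every PHP-FPM child may hold one database connection at a time. `db_headroom` is a hypothetical helper and all three inputs are values you would supply yourself. The numbers in the sample call are made up for illustration.

```shell
#!/bin/sh
# db_headroom NODES MAX_CHILDREN MAX_CONNECTIONS
# Prints how many database connections remain if every child on every
# web node connects at once. A negative number means the PHP pools can
# outgrow the database limit.
db_headroom() {
  echo $(( $3 - $1 * $2 ))
}

# 4 web nodes, 20 children each, database limit of 1000 connections
db_headroom 4 20 1000   # prints 920
```

On a MySQL-compatible engine the current limit can be read with `SHOW VARIABLES LIKE 'max_connections';`.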
