Current State Of AI As A Junior Programmer

I’ve been playing with artificial intelligence as a coding assistant for the past 30 months. The first attempts to employ AI to speed up code maintenance and development were a disaster. It often provided incorrect code examples. It would often mangle existing code so badly that it was faster to revert the code than try to untangle the huge mess of algorithmic mayhem it left behind. During those first months I dismissed AI as anything more than a general research tool, a replacement for Google search, and stayed far away from using it in daily coding activities.

About a year ago that started to change. I once again tried various AI agents from OpenAI, Grok, Anthropic, and others. It didn’t take long to realize that the latest iterations of the AI bots were much improved over the prior generations. The two favorites at the time, Claude from Anthropic and ChatGPT from OpenAI, were both OK at writing code. Not great. But they were no longer completely screwing up existing algorithms and leaving a mess of chaos and destruction in their wake. Hell, they would even occasionally patch code correctly and provide repo-committable code on the first go. Sometimes. If the tasks were simple enough.

I started employing AI more often. My coding environments, primarily JetBrains IDEs like WebStorm and PhpStorm, had decent AI agent interfaces built in. These agents could “see” the active project and in-progress code from within the IDE, giving them important context clues about the WordPress plugins and SaaS platform algorithms that make up the Store Locator Plus® platform. AI was just capable enough as a coding agent to break even on my coding projects, or at best save me a very minor amount of time.

Don’t get me wrong, it wasn’t great. It was just usable enough to stick with it. It was clear based on the progress these AI agents were making every few months that these tools would be required in any role involving software architecture, coding, and project management. No longer a black hole of lost time, it was just tolerable enough to keep working at it and trying to make it more efficient.

Fast forward to today. Things are a lot different, but still not great.

The Artificial General Intelligence Argument

Over the past month there have been notable upgrades in the processing models from all the major AI players. Anthropic and OpenAI are battling for supremacy in the software development space. Grok and Google are making constant improvements in general knowledge and task processing with artificial intelligence. Along the way I’ve heard multiple rumblings about artificial general intelligence (AGI).

For many in the field, AGI is considered the holy grail of artificial intelligence technology. The common refrain is that the first company or companies to achieve true AGI will “win the battle for AI supremacy”. However, if you delve a bit deeper into this topic you will soon learn that nobody can agree on the rules, the tests if you will, that artificial intelligence needs to pass to earn the official “AGI” certification. Turns out AGI is just a concept with no hard rules about what it actually is. Years ago it was thought that the “Turing Test” was the main criterion; in general terms, “can a typical human who interacts with a chat application determine whether they are chatting with a human or a machine (AI)?” Well, turns out many consider that goal to have been met months ago. It also turns out that many still think today’s AI does not qualify to be called AGI.

Turns out, I think they are right.

I have also heard more and more self-proclaimed “AI Experts” saying that we have reached AGI in specific verticals. Claude Code, as a coding or task management agent, is one example they cite. The claim is that the AI agent is given a generic task, works toward the goal, evaluates its own output at each step along the way, adjusts future tasks according to its own self-analysis, and eventually reaches the goal with little or no human involvement. The fact that the AI achieved a goal after having to “pivot”, possibly more than once, is considered good enough to earn the AGI moniker.

But is it?

I don’t think that is enough to qualify. I have seen examples everywhere that indicate we are still a long way from true general intelligence. Just try riding in a Tesla running FSD with all the latest tech involved and you’ll quickly learn that those all-too-common real-world “corner cases” throw some of the most advanced AI systems in the world for a loop. If a new Tesla Model S running the latest full self-driving (FSD) software plots a route that happens to bring it to a one-way street or a closed intersection, it often ends up in a dead-end situation. The FSD quickly freaks out, gives up, and requires human intervention. Most capable humans, even those of questionable intelligence, figure out the proper solution in a matter of seconds. AI often gets stuck in a hyper-analysis loop that consumes so many resources it aborts the mission.

AI In A Junior Programmer Role

While I don’t know the ins-and-outs of advanced AI systems like the Tesla FSD software, I do know software architecture and development at a highly advanced level. As such I can easily ascertain the functional levels of an AI system and properly evaluate the results of the AI output when performing tasks that would normally be assigned to a junior programmer. I’ve had dozens of programmers working for me either as direct employees or as contractors for a wide variety of software projects over the years. As such I have a solid baseline of what the capabilities and expectations are of various programmer skill levels from interns to junior programmers all the way up to senior full stack developers.

As of January 2026 even the best AI systems with extensive context hints and augmented data retrieval systems are generally the equivalent of entry-level programmers with some hints of junior programmer capabilities. If given a blank canvas they can come up with mostly functional application code, as long as the application is sufficiently minimalistic. AI is great at mimicking well-defined UX patterns. The applications look good on the surface. They are even mostly functional. Just don’t look at the implementation too closely.

If you do look closely you’ll quickly see the inefficient tangle of code and backend processes hidden beneath the surface. AI is certainly not capable of creating performant code these days. I partly blame that on the insane amount of poorly written code humans have generated, mostly from offshore contract houses filled with coders with barely high-school-level coding skills. Sadly, AI is trained on far too much low-quality garbage code. Because it is an insanely complex pattern engine rather than a truly intelligent being built on a silicon model of the human brain, it repeats that low-quality design and implementation.

AI Failing A Cursory “Level 2” Analysis

My first example that highlights the lack of intelligent processing is the lack of understanding of what I call the “implied deeper analysis” of a problematic algorithm. In this example I gave both ChatGPT Codex 5.2 and Claude Code v2.1.7 the same task. Both failed in similar ways. Both have had extensive context training on the application at hand, but in this case application and code design knowledge is not that important. The general rules of problem resolution do not require intimate application knowledge. The only thing that is required is a basic understanding of application processing, real time analysis, and code stack processing.

In simplest terms, there was a bug in a piece of code where a variable was being set to null (nothingness, a non-value). A later section of code iterated over that variable, looping through all of its values with a basic PHP foreach loop. For this to work the variable needs to hold a list of things, an array. A simple review of this well-documented piece of code would tell any coder that the variable was supposed to be an array (a list of things). Generic code-inspection tools from more than a decade ago could easily figure this out. So could the AI agents.

However, my code was failing. As I explained to the AI: “When this piece of code starts, the variable is indeed an array; the first few steps in this function change that variable from an array to null (nothing), which causes the foreach to fail.” The AI can read all the code. It can see all the code hints and documentation. It has access to real-time processing and debugging tools, the same as any hired human programmer would have.

I explained the issue in detail to the AI and asked for a fix. Within 10 minutes or so I had a fix. The code ran. No failure.

Here is what the AI did…

function xyz( array $list ) {
    // ... do some steps

    $list = do_this_to_the_array( $list );

    // AI Agent fix
    if ( is_null( $list ) ) {
        $list = array();
    }

    // Existing code that would break
    foreach ( $list as $item ) {
        // ... do things to each thing on the list
    }
}

That quick little fix, which my AI agents were so proud of, worked. It stopped the application from crashing outright. However, I had asked the AI agents to figure out why the list, which started as a list of things, was being set to null within this function, and to come up with a fix so the list would not be empty. I said “this list is being set to null but it should be an array” as part of my longer description.

Turns out that was a mistake and allowed the AI agent to be lazy and choose the “fuck it good enough” path. While I’ve hired plenty of contract coders “on the cheap” that would do this kind of thing on a regular basis, it is also the type of contractor I would fire after one warning.

On the other hand, any programmer I would have hired to my contract coding company would NEVER have come back with such a patch. In fact, this type of patch is dangerous. It MASKS the problem without solving it. The underlying problem is still there but now much harder to track down.
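To make the masking concrete, here is a minimal sketch. The article does not show the body of do_this_to_the_array(), so the version below is a hypothetical stand-in that reproduces the described bug: it modifies the list but never returns it. The xyz() wrapper also returns a count of processed items, an addition purely so the effect is visible.

```php
<?php
// Hypothetical stand-in for the buggy helper: it modifies the list but
// never returns it, so the caller receives null.
function do_this_to_the_array( array $list ) {
    foreach ( $list as $i => $item ) {
        $list[ $i ] = strtoupper( $item );
    }
    // Bug: missing "return $list;" so the function returns null.
}

// The AI-style patch: coerce null back to an empty array and carry on.
function xyz( array $list ): int {
    $list = do_this_to_the_array( $list );
    if ( is_null( $list ) ) {
        $list = array();
    }
    $processed = 0;
    foreach ( $list as $item ) {
        $processed++; // ... do things to each item on the list
    }
    return $processed;
}

// Three items go in, zero are processed, and nothing complains.
echo xyz( [ 'a', 'b', 'c' ] ), PHP_EOL;
```

No crash and no warning: the loop simply does nothing, which is exactly the kind of silent failure that surfaces months later as a customer complaint.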

A halfway-decent junior programmer would have taken my initial input and done a lot more with it than stop the error. They would have run the application and found that $list started out with an actual list of things but was null by the time it reached the foreach. Within minutes they would have realized the do_this_to_the_array() function was doing something to that $list variable and setting it to null.

The AI agents, both of them, on the other hand, were like “fuck it… this is being set to null and it should be an array… let’s just force it to be an array”. NEITHER one, despite hints to do so, even looked at the do_this_to_the_array() function for further inspection. Any human programmer would have looked into that function and realized it was munging the $list. What was wrong would also have been obvious from a simple visual inspection, no run-time analysis needed. The underlying do_this_to_the_array() function was returning null instead of the modified list.

A super easy fix.
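A minimal sketch of that root-cause fix, again using a hypothetical stand-in for do_this_to_the_array() since its real body is not shown: return the modified list. Declaring an `array` return type is an optional extra safeguard; if a later edit drops the return again, PHP throws a TypeError inside the helper itself, right where the bug lives, instead of letting a caller paper over it.

```php
<?php
// Hypothetical helper, fixed: it returns the modified list, and the
// ": array" return type makes PHP throw a TypeError if it ever returns
// null (or nothing) again.
function do_this_to_the_array( array $list ): array {
    foreach ( $list as $i => $item ) {
        $list[ $i ] = strtoupper( $item );
    }
    return $list;
}

function xyz( array $list ): int {
    $list = do_this_to_the_array( $list ); // no null guard needed
    $processed = 0;
    foreach ( $list as $item ) {
        $processed++; // ... do things to each item on the list
    }
    return $processed;
}

echo xyz( [ 'a', 'b', 'c' ] ), PHP_EOL; // prints 3
```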

However, if I had just let the AI agent do this task and re-run the application to see if that error went away, I’d have gone on thinking “this AI is great, look how smart it is”. Only to find out months later, when customers complained the application was not working properly, that the AI had masked the problem instead of solving it.

In my opinion a truly intelligent operator would not simply complete a task at hand. It would analyze the situation and the context in its entirety. It would infer additional knowledge from the prompt that was entered asking for a fix. Where is the failure in both of these systems? Who is to say.

The bottom line is that the AI agents did not provide a complete solution. Adding more prompts to guide them to a complete solution is counterproductive. It was taking longer to write prompts and correct the AI’s actions than to find and fix the code myself.

AI Handling A Mid-Size Refactoring

In my second example the results were a bit more promising. This is a far more advanced task that I would typically hand off to a solid junior programmer. In this case I was pleasantly surprised by the initial results. The pleasant surprise was short-lived once I looked “under the covers”, but what the AI agent came up with was still fairly impressive. Did it save me time? Probably. How much is yet to be determined.

The task at hand? Convert an existing PHP module into a TypeScript React component. This is a bigger task than some people may imagine. Not only are we changing the coding language, but also the implementation methodology. While PHP and JavaScript (or TypeScript) are similar in many ways as far as syntactical constructs go, it is not a one-for-one translation. In addition, a React-style application uses a complex front-end implementation to draw the web pages while sending requests to a server (the backend) to get data that is later filled in on the page being drawn. Most modern web applications use this type of architecture, maybe not React but employing the same principles. Traditional PHP code, on the other hand, fetches the data directly on the server ahead of time, then draws the web page using that data. The architecture is quite different.
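A minimal sketch of the architectural difference described above, assuming a made-up list of plans (the function names and data are hypothetical, not taken from the actual Store Locator Plus code). In the classic PHP style the server builds the finished HTML; in the React style the server only answers a data request with JSON and the browser-side component does the drawing.

```php
<?php
// Hypothetical data; in a real plugin this would come from the database
// or a billing service.
function get_plans(): array {
    return [
        [ 'name' => 'Starter', 'price' => 10 ],
        [ 'name' => 'Pro', 'price' => 25 ],
    ];
}

// Classic PHP: fetch the data on the server, then draw the finished page.
function render_plans_html(): string {
    $html = '<ul>';
    foreach ( get_plans() as $plan ) {
        $html .= sprintf(
            '<li>%s: $%d</li>',
            htmlspecialchars( $plan['name'] ),
            $plan['price']
        );
    }
    return $html . '</ul>';
}

// React style: the server only returns data; the browser-side component
// draws the page and fills in these values.
function plans_endpoint(): string {
    return json_encode( get_plans() );
}

echo render_plans_html(), PHP_EOL;
echo plans_endpoint(), PHP_EOL;
```

Same data, two very different places where the page gets built, which is why the conversion is not a line-by-line translation.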

Thankfully for my AI agent, in this case Junie (which is a modified, code-specific implementation of Claude Code as far as I can tell), I had plenty of examples of how to do this refactoring. I already had several PHP libraries that I had converted to React components over the past few months. I pre-loaded these examples into the AI agent to provide a solid baseline for context. The task itself was far more complex: “Take this existing PHP library and create the equivalent in TypeScript using the same React components I showed you earlier. Use the modern React interface design, but keep the underlying code logic and architecture from the PHP application intact.”

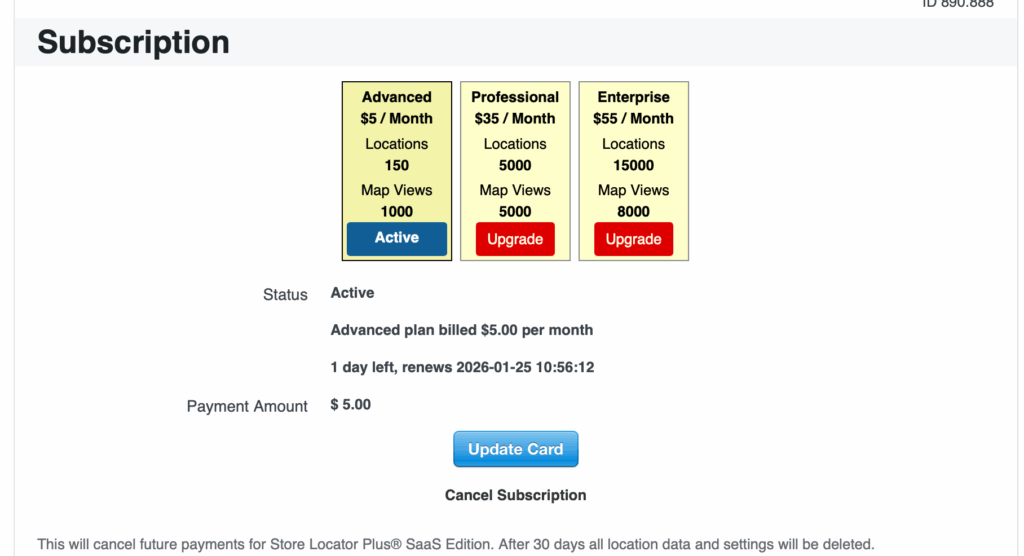

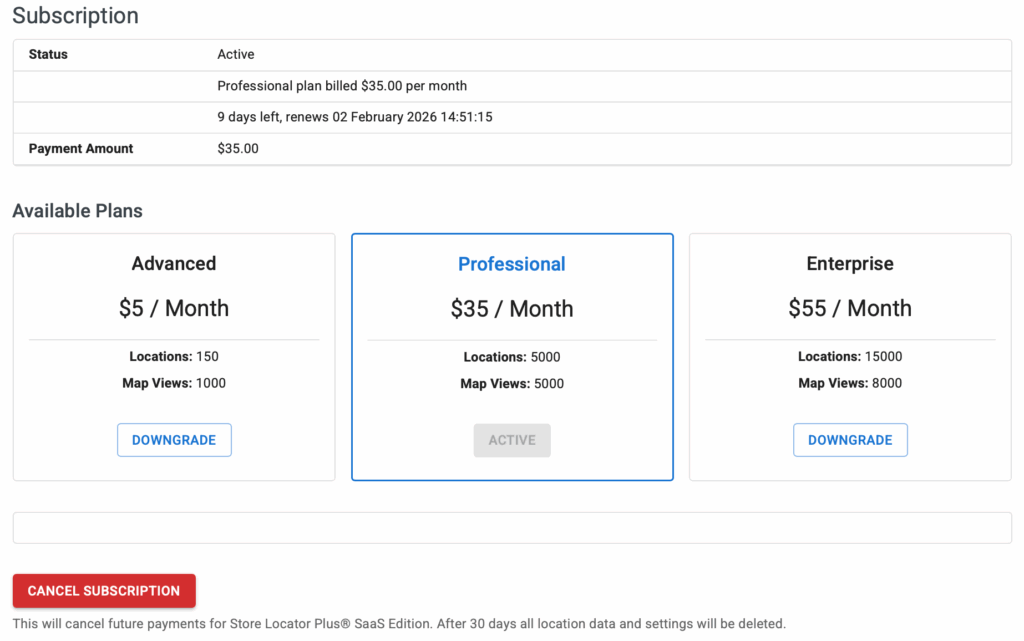

The Junie AI agent took a while with this task, consuming about 20 minutes of processing time to produce the initial results. Not a very long time considering what was asked. A junior programmer would have taken most of a day, with the requisite water cooler breaks and what-not, of course. The initial results that the AI agent came up with were impressive. The initial TypeScript compiled with no errors. The page rendered and was mostly correct. Since it was using the React components and Material UI framework I employed elsewhere, the UX was mostly where I wanted it. Mostly.

Of course there were some obvious issues with the UI. For one, there is a blank table above the cancel subscription section. Why draw that if the content is empty? That is a beginner programmer mistake. Also, every plan is labeled either “Active” or “Downgrade”, even Enterprise, which used to clearly be an “Upgrade” button. Minor issues, but ones a junior programmer would have caught.
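Both UI mistakes come down to a tiny bit of logic. The sketch below is written in PHP for consistency with the rest of this post, though the same comparison applies in the new React component. The plan names and their ranking are assumptions for illustration; the real plan list is not shown here.

```php
<?php
// Hypothetical plan ranking; the real plans and their order are assumed.
const PLAN_RANK = [ 'Starter' => 1, 'Pro' => 2, 'Enterprise' => 3 ];

// Label each plan's button relative to the user's current plan, so a
// higher-ranked plan such as Enterprise gets "Upgrade", not "Downgrade".
function plan_button_label( string $current, string $plan ): string {
    $diff = PLAN_RANK[ $plan ] <=> PLAN_RANK[ $current ];
    return match ( $diff ) {
        0  => 'Active',
        1  => 'Upgrade',
        -1 => 'Downgrade',
    };
}

// Only draw the table when there is something to put in it.
function render_table( array $rows ): string {
    if ( empty( $rows ) ) {
        return '';
    }
    $html = '<table>';
    foreach ( $rows as $row ) {
        $html .= '<tr><td>' . htmlspecialchars( $row ) . '</td></tr>';
    }
    return $html . '</table>';
}

echo plan_button_label( 'Pro', 'Enterprise' ), PHP_EOL; // prints Upgrade
```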

That said, it was impressive that the AI agent rewrote a decent-sized PHP module to provide a small PHP pre-loader for the JavaScript to use as a bootstrap for the UX elements. It also created the right PHP framework needed to load a mini React applet inside a PHP application inside a WordPress multisite installation. Granted, it was able to copy the architecture I had created previously, but as a great pattern replication engine, it did a fairly on-point job of carrying that architecture over to the new React-based configuration.
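For readers unfamiliar with the pattern, a PHP pre-loader of this kind typically prints a mount point for the React applet plus a blob of JSON the script reads on startup. The sketch below is a generic, framework-free illustration; the element id, the `window.slpBoot` variable, and the data keys are hypothetical, not what the agent actually generated. Inside WordPress you would normally hand the data to an enqueued script instead of echoing a tag yourself.

```php
<?php
// Hypothetical bootstrap: print a mount point for the React applet plus
// the data it needs on startup. Names and keys are illustrative only.
function render_react_bootstrap( string $mount_id, array $boot_data ): string {
    // JSON_HEX_TAG / JSON_HEX_AMP escape "<", ">" and "&" inside string
    // values so the data cannot close the surrounding <script> element.
    $json = json_encode(
        $boot_data,
        JSON_HEX_TAG | JSON_HEX_AMP | JSON_THROW_ON_ERROR
    );
    return sprintf(
        '<div id="%1$s"></div><script>window.slpBoot = %2$s;</script>',
        htmlspecialchars( $mount_id, ENT_QUOTES ),
        $json
    );
}

echo render_react_bootstrap(
    'slp-subscription',
    [ 'plan' => 'Pro', 'processor' => 'paypal' ]
), PHP_EOL;
```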

However, like the prior example, digging a little deeper uncovered a myriad of problems.

The Deeper Issues

Looking deeper at the resulting application made me realize that while proficient in some things, the AI agents are still not well trained coding agents. They veer far from the path of a properly trained junior programmer. This becomes very obvious when looking at the code that was created and makes it very clear that while the code may run, it is not going to be efficient and often has hidden real-world problems embedded within.

Looking deeper into the code there are a plethora of issues. Some are relatively significant. Others are more of a “why did you do this same exact thing TWICE” proficiency problems.

The “It Is Always Stripe” Issue

For example, this piece of code is supposed to do something when someone has paid using the Stripe payment service. The AI decided that Stripe and PayPal were the same thing, but only on this one somewhat important line of code. The rest of the methods it created treat Stripe and PayPal as distinct entities. This raises the question: why, while working in the same context on the same tasks with the same context memory stack intact, did it decide “hey, let’s check if we are using Stripe, but also PayPal just to be safe”? Yes, I checked the original source and cannot find anything in the prior code it based this work on that does anything similar.

The AI is saying “if the person is using Stripe OR they are using PayPal”… do the Stripe stuff. WTF.

// Stripe specific
$stripe_data = [];
if ( $usingStripe || $this->myslp->User->payment_processor === 'paypal' ) {
    // ... Stripe-specific handling
}

These types of issues come up consistently.
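For contrast, here is a minimal sketch of routing on the payment processor explicitly, so a PayPal user can never fall into the Stripe branch. Only the 'paypal' value appears in the generated code; the 'stripe' value and the handler names are assumptions for illustration. The faulty condition is included as a standalone function purely so its behavior can be checked.

```php
<?php
// Hypothetical routing helper: the processor values and handler names
// are assumptions, not the plugin's real API.
function checkout_handler_for( string $payment_processor ): string {
    return match ( $payment_processor ) {
        'stripe' => 'stripe_checkout',
        'paypal' => 'paypal_checkout',
        // Fail loudly on anything unexpected instead of guessing.
        default  => throw new UnexpectedValueException(
            "Unknown payment processor: {$payment_processor}"
        ),
    };
}

// The faulty condition from the generated code, isolated for comparison:
// it sends PayPal users down the Stripe path.
function uses_stripe_path_buggy( bool $usingStripe, string $payment_processor ): bool {
    return $usingStripe || $payment_processor === 'paypal';
}

echo checkout_handler_for( 'paypal' ), PHP_EOL; // prints paypal_checkout
```

The explicit `match` with a throwing default is one design choice among several; the key point is that each processor gets exactly one path, and an unknown value is an error rather than a silent fallback.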

If I had simply accepted the generated code and focused only on the visual presentation, I could easily have fooled myself into thinking “hey, this AI did a great job with little effort on my end”. I could very easily have told the AI “hey, this is how you label the buttons upgrade or downgrade” and “remove that empty table outline if the content is empty”.

It would have fixed those issues. On the surface this would look great.

Later my tests for PayPal users would do really weird shit. Not break, but not work right either. When PayPal users tried to upgrade, downgrade, or cancel their subscription they’d have been directed to Stripe. Stripe would put up an interface saying “who the hell are you?”. The user would not be happy. Customer Service would be getting angry emails.

That is not the result we want from AI.

The “Status Is Always Active” Issue

This is a piece of code, verbatim, that the AI agent came up with. It shows a complete lack of “intelligence” or understanding of code logic: every single time this runs, $theStatus is set to ‘Active’, rendering the preceding if test useless.

if ( $this->myslp->User->subscription_is_cancelled ) {
    $theStatus = __( 'Ending', 'myslp' );
}
$theStatus = __( 'Active', 'myslp' );

Not only is the above code inefficient, it turns out it is functionally incorrect.

As a test, I asked the AI if it saw anything wrong with $theStatus in this function. It found the issue and fixed it after a too-long analysis. However, if I need to go back and review the work, point out the error, ask the AI to fix it, and then check that those changes don’t introduce new errors, this becomes less of a productivity tool and more of a chore. Fixing this one issue took the AI more than 5 minutes, when a human operator, even an entry-level programmer, would have come up with this particular fix in less than 30 seconds:

if ( $this->myslp->User->subscription_is_cancelled ) {
    $theStatus = __( 'Ending', 'myslp' );
} else {
    $theStatus = __( 'Active', 'myslp' );
}

AI Needs Expertise

These are only a couple of examples within the code that was created.

These are the types of issues that come up when you only look at the visual results in an application. This is why I would never trust a “vibe coder” to produce anything useful in real-world situations. If you don’t know what you are doing, or are lazy enough to just let AI iterate over past work until it “looks right” without looking closely at the underlying results after each interaction, you are likely to build a house of cards. Eventually all those hours spent “vibe coding” are going to come crashing down in flames.

It also makes me ask, “What was the AI thinking?” In reality it isn’t really thinking so much as iterating over patterns. Looking closer at the code, repeated patterns like this make it super obvious that it copied a code pattern and then simply inserted it whenever it needed the same answer. It can’t even understand the code at a deep enough level to realize “hey, I set this 3 lines earlier, I don’t need to do it again”. That is something a junior programmer would never do.

Where Is AI Today?

AI is certainly progressing. Every time new versions are released it becomes far more capable. AI is at the point where it is very proficient at less precise work such as writing text, creating images for entertainment value, or even doing competitive research and comparative analysis. AI can now find, filter, sort, and come up with results from simple text prompts. People no longer need to write simple code, create spreadsheets, or even learn how to use basic editing tools to get results.

However, for disciplines where precision and accuracy matter it still has a long way to go to achieve what I would consider “proficiency”. Yes, when it comes to coding it can perform simple tasks fairly well. It can mimic well-defined architecture patterns and apply them to generic written-word rules we provide as prompts. The results can be decent if the prompts are well crafted and matched with a well-defined context engine that steers it toward solid results.

However, when it comes to writing well-crafted, efficient code or doing a deep analysis to solve complex application processing issues, it still has a ways to go. I am sure someday it will get there, but in my opinion it is still quite a ways from being a reliable, proficient junior programmer. It is even further away from being able to create a well-crafted, fully functional application from a simple design specification, something most decent full stack senior developers can do without too much effort.

For now, I continue to share my AI mantra with anyone who asks: “AI is a productivity multiplier that yields the best results when employed by an expert in the field. Without the human expertise to guide the process and evaluate the results, it is equally proficient as a problem multiplier.”