Using AI With JetBrains PhpStorm

I’ve been playing with the various iterations of AI coding assistants provided by JetBrains over the past few years. Initially, the AI assistance was complete garbage. The generated code was often wrong or incomplete. When it was mostly right, it had hidden issues that caused performance problems or made the resulting applications fragile. In general, AI coding assistance was awful. Inexperienced coders went into “Vibe Coder” mode and started producing lots of garbage, often ending in failed full production deployments.

About a year ago, things changed. I noticed the latest models from OpenAI were generating far better code, starting around the time GPT-4o was released. Finally, the AI assistant was helping refactor code and fix bugs more often than it was creating new issues. It was still far from perfect. It often took longer to tell the AI what it did wrong, or where to find more information, than it would have taken to do the work without an AI assistant at all. However, it did sometimes come up with new methods that improved on my own work. I started using it, but made sure everything was reviewed and managed, much like having a new intern helping “fix” code.

As AI continues to progress, the latest iterations of code-centric models and agents like Claude Coder and DeepSeek Coder are producing far better results: far fewer errors, far more insight, and more improvements. It still does some wildly crazy shit from time to time, but it has gotten better. It is actually mostly useful at least half the time. Overall, it is now a productivity enhancement rather than a black hole of prompt hacking that sucks up a week of time to fix one line of code.

Over the past few months I realized that AI, if properly managed and employed, is going to be a game changer for multiple job functions, including acting as a junior software engineer. My goal, however, is to learn as much as I can about AI and see if I can create a junior, or even senior, software architect and engineer all rolled into one.

I decided to use the NVIDIA DGX Spark to help me on this journey, along with many hours of prompt hacking on a variety of AI services including ChatGPT, Grok, Claude, Perplexity, and others. I am also learning how differences in inference engines affect the quality of the work, as well as how the models themselves perform. A model that creates perfect, elegant code but takes 30 minutes to process a single request is nearly as useless for boosting productivity as an engine that produces viable but crappy code in 10 seconds. Finding the right balance is critical.
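One rough way to put numbers on that trade-off is to read the timing fields Ollama returns with a non-streamed response. This assumes the model is served by Ollama, as in the Continue configuration later in this post. Ollama reports `eval_count` (generated tokens) along with `eval_duration` and `total_duration` (both in nanoseconds). The helper below is a minimal sketch that turns those fields into tokens per second and wall-clock seconds. The `GenerationStats` and `generation_stats` names are hypothetical, and the sample numbers are invented for illustration. They are not a benchmark.

```python
from dataclasses import dataclass

NS_PER_SECOND = 1_000_000_000


@dataclass
class GenerationStats:
    """Throughput figures derived from one Ollama response (hypothetical helper type)."""
    tokens: int
    seconds: float
    tokens_per_second: float


def generation_stats(response: dict) -> GenerationStats:
    """Summarize the timing fields of a final (non-streamed) Ollama response.

    Durations are in nanoseconds: `eval_duration` covers token generation only,
    while `total_duration` also includes model loading and prompt processing.
    """
    tokens = response["eval_count"]
    eval_seconds = response["eval_duration"] / NS_PER_SECOND
    total_seconds = response["total_duration"] / NS_PER_SECOND
    rate = tokens / eval_seconds if eval_seconds > 0 else 0.0
    return GenerationStats(tokens=tokens, seconds=total_seconds, tokens_per_second=rate)


if __name__ == "__main__":
    # Invented numbers for illustration only.
    sample = {
        "eval_count": 600,
        "eval_duration": 20 * NS_PER_SECOND,
        "total_duration": 25 * NS_PER_SECOND,
    }
    stats = generation_stats(sample)
    print(f"{stats.tokens} tokens in {stats.seconds:.1f}s "
          f"({stats.tokens_per_second:.1f} tokens/s)")
```

Comparing the tokens-per-second figure with your own judgment of code quality, model by model, is one way to find that balance point.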

JetBrains Is Falling Behind

One thing has become readily apparent over the past few months of working with AI on my Store Locator Plus® project: JetBrains is falling behind as an AI-enabled IDE. Yes, they’ve had the AI Assistant available for well over a year now. However, it is an add-on provided via a plugin, not something fully integrated into the IDE. From what I’ve seen of other IDEs like Cursor, AI is more central to the coding process there. Even VS Code is often cited as a better alternative for AI-assisted coding.

While the JetBrains AI Assistant is sufficient for rudimentary AI-assisted tasks, it fails at complex requests. Flaws in the AI Assistant often have it building an insanely complex, but unnecessary, context to send to the models for processing. This design means your tokens, which are now fairly costly, get used up very quickly. In fact, a simple request the other day to create a basic instruction set for the AI Assistant ate up 75% of my tokens in one go. Ensuring the AI agent provides proper context, rather than taking a “throw everything at the wall and see what sticks” approach, is critical to efficient AI processing. Large context envelopes filled mostly with irrelevant content eat up network bandwidth and force the AI servers to burn enough energy to power a small country.
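A back-of-the-envelope estimate shows why a dozen attached files can drain a quota so quickly. The sketch below assumes the common rule of thumb of roughly four characters per token for English text and code. Real tokenizers vary by model, so treat the result as an order-of-magnitude figure only. The function names, file names, and file sizes are hypothetical.

```python
import math

# Rule-of-thumb assumption: roughly 4 characters per token. Real tokenizers differ.
CHARS_PER_TOKEN = 4


def estimate_tokens(text_length: int) -> int:
    """Rough token estimate for text of the given length in characters."""
    return math.ceil(text_length / CHARS_PER_TOKEN)


def context_cost(prompt: str, attached_sizes: dict[str, int]) -> int:
    """Estimate tokens for a prompt plus every attached file (sizes in characters)."""
    total = estimate_tokens(len(prompt))
    for size in attached_sizes.values():
        total += estimate_tokens(size)
    return total


if __name__ == "__main__":
    prompt = "Write a short profile of my development environment."
    # Hypothetical attachments, similar in kind to what an over-eager assistant adds.
    attachments = {
        "docker-compose.yml": 3_000,
        "src/SomeClass.php": 40_000,
        "js/admin.js": 60_000,
        ".idea/workspace.xml": 25_000,
    }
    lean = context_cost(prompt, {})
    bloated = context_cost(prompt, attachments)
    print(f"prompt only: ~{lean} tokens; with attachments: ~{bloated} tokens")
```

Even with rough numbers, the attached files outweigh the actual question by three orders of magnitude. That gap is what gets billed.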

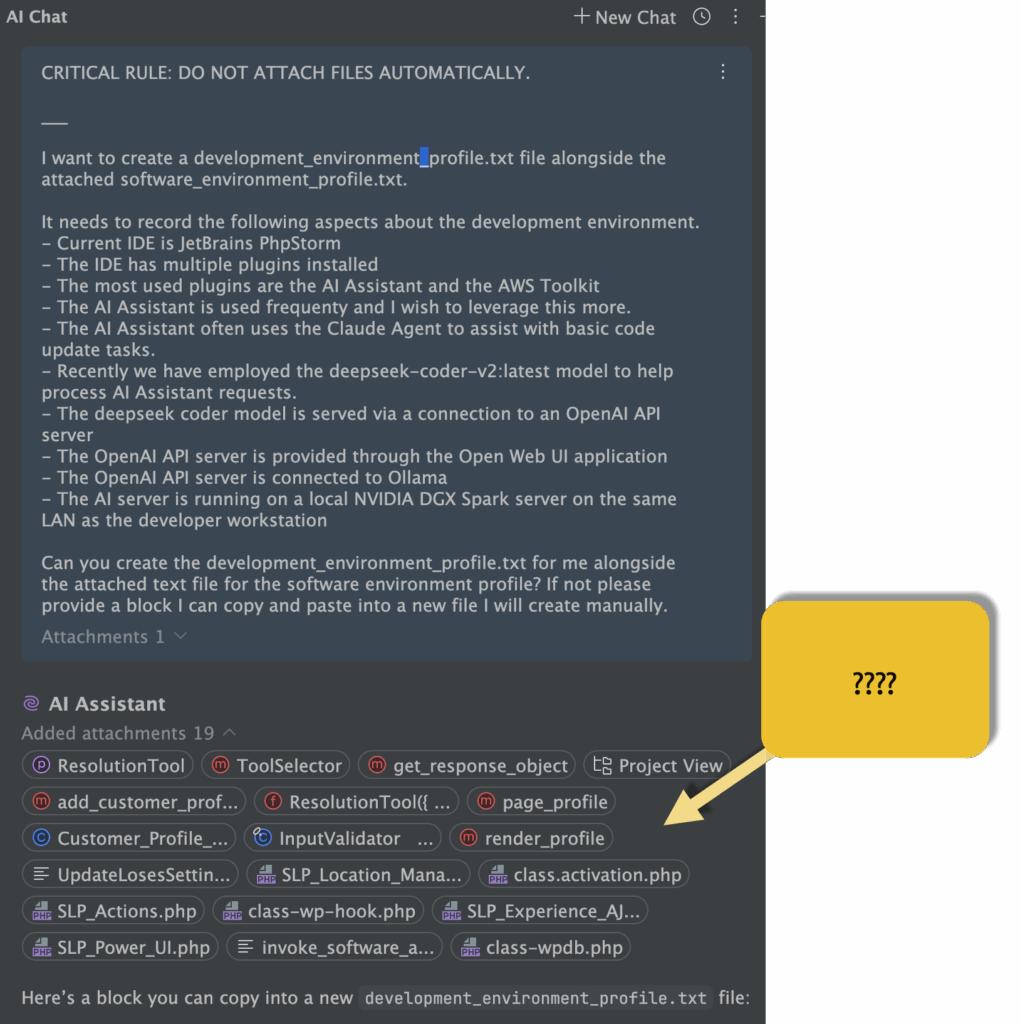

This issue with the AI Assistant is readily apparent in the latest builds. While trying to generate a basic “this is my development environment” profile that any LLM can process, the JetBrains AI Assistant routinely decides to attach at least a dozen, if not two dozen, random files from my project. This includes random configuration files related to Docker, some PHP code files, some JavaScript files, the internal IDE management files and more.

This happens whether I am talking to the NVIDIA DGX Spark on the LAN via the OpenAI API or using JetBrains’ built-in cloud models. Sadly, when running through those hosted models, such as Claude or GPT-5, this type of errant context loading chews up AI credits in a hurry.
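Talking to a LAN-hosted model through the OpenAI-style API only needs a small JSON request, with exactly the context you choose to send. The sketch below assumes an Ollama server on the Spark, since Ollama exposes an OpenAI-compatible `/v1/chat/completions` endpoint. It also assumes the host address and model tag from the Continue configuration later in this post. The helper names `build_chat_request` and `ask` are hypothetical, and the code uses only the Python standard library.

```python
import json
import urllib.request

# Assumed values: host/port and model tag taken from the Continue config in this post.
API_BASE = "http://192.168.0.198:11434"
MODEL = "deepseek-coder-v2:latest"


def build_chat_request(api_base: str, model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request containing only the given prompt."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return urllib.request.Request(
        url=f"{api_base.rstrip('/')}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt to the assumed server and return the first choice's text."""
    request = build_chat_request(API_BASE, MODEL, prompt)
    with urllib.request.urlopen(request, timeout=300) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

From a script or REPL on the same LAN, calling `ask("Summarize what a PHP autoloader does in two sentences.")` should return the model's reply. The request carries only that one sentence, so nothing else from the project is sent.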

Continue Plugin vs. AI Assistant

Before installing Cursor or VS Code and learning a new IDE, which I really don’t want to do after learning all the JetBrains tricks over the past 15 years, I decided to give the Continue plugin another try. The Continue plugin connects PhpStorm to the Continue.dev AI assistant.

I had issues with the plugin on the first run, thanks to some random Java null errors, but a full system reboot after installing the Continue plugin seems to have resolved them. Running in PhpStorm, the Continue plugin works much like the built-in AI Assistant. Personally, I feel the UX is a bit less refined, but given the issues with the JetBrains AI Assistant noted above, I’d rather have functionality over style. That’s always been my modus operandi, as anyone familiar with my less-than-stellar UX work on Store Locator Plus® will note.

To get the Continue AI agent working with the DGX Spark, which serves models through Ollama and its OpenAI-compatible API, I needed to create a local configuration file. Thankfully that was fairly easy, and the AI agent immediately connected to my deepseek-coder-v2 model.

```yaml
name: Config
version: 1.0.0
schema: v1
assistants:
  - name: default
    model: OllamaSpark
models:
  - name: OllamaSpark
    provider: ollama
    model: deepseek-coder-v2:latest
    apiBase: http://192.168.0.198:11434
    title:
    roles:
      - chat
      - edit
      - autocomplete
```

Thankfully, the Continue agent only includes specifically referenced files in the context. Sadly, the UX is less intuitive, and sharing a larger context or set of files is much harder than with the JetBrains AI Assistant. While this is good for reducing token consumption, it is looking more like an “all or almost nothing” approach.
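If Continue fails to connect, one way to check the `apiBase` and `model` values in the configuration file above is to ask Ollama which models it has pulled. Ollama’s `GET /api/tags` endpoint returns a JSON object with a `models` list, and each entry carries a `name` such as `deepseek-coder-v2:latest`. The sketch below keeps the parsing separate from the network call so the check can also be exercised offline. The helper names are hypothetical, and the constants simply mirror the config.

```python
import json
import urllib.request

# Assumed to match the apiBase and model entries in the Continue config above.
API_BASE = "http://192.168.0.198:11434"
MODEL = "deepseek-coder-v2:latest"


def installed_models(tags_payload: dict) -> set[str]:
    """Extract model names from an Ollama /api/tags response body."""
    return {entry["name"] for entry in tags_payload.get("models", [])}


def fetch_tags(api_base: str, timeout: float = 10.0) -> dict:
    """Fetch the list of locally available models from an Ollama server."""
    url = f"{api_base.rstrip('/')}/api/tags"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def model_available(api_base: str, model: str) -> bool:
    """Return True if the server answers and lists the exact model tag."""
    try:
        names = installed_models(fetch_tags(api_base))
    except OSError:
        # URLError (unreachable host, refused connection) and timeouts subclass OSError.
        return False
    return model in names
```

If `model_available(API_BASE, MODEL)` returns `False`, either the address or the model tag in the configuration needs attention. A tag mismatch, such as a missing `:latest`, is an easy one to overlook.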

Summary

While I am now able to work with two different agents and with multiple models and inference engines, none of the tools I have available at the moment are a perfect fit. I often find myself going back to my local ChatGPT application and using the built-in macOS bridge so that it can communicate with PhpStorm directly. The crazy part is that ChatGPT gets open files and other context data from PhpStorm via this local bridge, and it NEVER includes dozens of files the way the built-in PhpStorm AI Assistant does. Sometimes PhpStorm does try to feed ChatGPT a random context element, like the contents of an open AI Assistant chat, but that is quickly remedied by turning off the AI Assistant before switching to ChatGPT.

Sadly, none of these solutions are great for long-term training on the code or system architecture. While ChatGPT and Claude do have projects with their own long-term context storage (memory), those are still not great solutions. At the very least, that cross-session memory is trapped in the AI service. If you have a project in ChatGPT, good luck getting those “knowledge tokens” out of there so you can transfer all that project training to another AI agent.

AI-assisted coding is still very early in its development. Some things are super cool and exciting and mostly work OK. Other things are held together with duct tape and bubble gum. As soon as you apply any pressure, most AI solutions fall apart. For a small business or solo code hacker like myself, turnkey advanced AI solutions are not there yet.

I have some ideas. I am working on them and hope to bring some concepts to market soon. S3 storage and symbolic descriptive languages are working OK, but off-the-shelf consumer AI services run out of tokens long before they can handle the requests. There IS a better solution, and one that does not require a degree in Artificial Intelligence to make it work. Someday we will all benefit from a simpler solution. Until then, these AI vendors will continue trying to force vendor lock-in and develop proprietary systems with limited capabilities.

In the meantime I’ll continue using my Store Locator Plus project as an AI training ground as I work toward sharing knowledge and finding possible solutions.

Feature Image: AI generated by Wan 2.5 via Galaxy.ai