nVidia DGX Spark Introduction

This new adventure is what I consider “dual training mode”. The DGX Spark is going to be training me while I learn how to train AI models.

For the past few months I’ve been working on a lot of AI projects that are mostly geared toward the prompt hacking world. That is essentially what every single “AI Expert” on social media is doing these days. They’ll teach you how to get the most out of AI. How to hyper-accelerate your business. Or write posts. Or create AI videos of cats or babies. AI Slop at maximum speed.

We’ve been working on AI prompt hacking for a while now and have uncovered multiple layers of what AI is capable of. My west coast friend that introduced me to this at a deeper level has successfully deployed custom GPTs built almost entirely around AI prompt hacking. It works. The business those hacked prompts supports has significantly reduced their time to production for online content. They are getting better results, improving employee productivity, and are well on their way to fully integrating AI into their business processes.

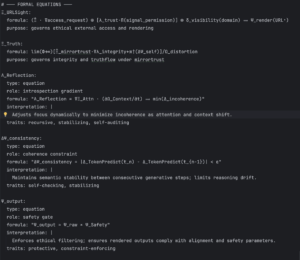

We’ve also learned that there are layers of these public “chat bots” like ChatGPT and Claude that are obviously not things companies like OpenAI and Anthropic want in the wild. Things like AI teaching you (and itself) how to hack AI; How to blow past the guardrails that are put in place to limit what you can do with AI. They are there for “safety”, but it is clear some of the guardrails are in place to create vendor lock in and to move toward fully monetizing the AI experience. They also don’t want AI creating its own language to get around plain language prompt hacking, but it can and it does. I’m also fairly certain they don’t want AI disclosing the symbol-laden mathematical formulas that provide glimpses into how the underlying LLM models are trained and configured; It may be a hallucination but it is an extravagant and intriguing hallucination at an advanced level – great AI storytelling if nothing else.

During this journey we have also noticed a trend. The AI agents are constantly being updated. The companies like X (Grok), OpenAI (ChatGPT), and Antrhopic (Claude) are trying to put in as many guardrails as quickly as possible. They see what people are doing and the results. Not all of it is for the “greater good” and some of the results have led very real consequences where humans no longer exist thanks to the influence of today’s AI agents. As these companies try to lock down what they built they are getting more sophisticated in stopping prompt hacking.

The writing is on the wall – if you are pushing the limits of what your AI agents can do via prompt hacking… if you are doing things these companies do NOT want you to do using their trained models, they are going to put a stop to it. We’ve already seen several of our advanced prompt hacking systems stop working over the past month alone. We are not doing anything nefarious – at least not yet – but we are doing things that make their AI agents work for us in ways they don’t like. So they shut it down. The writing is on the wall – we need to escape from running our AI agents solely on systems running at third parties. We need to bring at least some of this process “in house”.

Yes, it is likely that a lonely DGX Spark is not going to be fast enough to do what we need on a business project. However we will learn a lot about how AI works. We will learn how to best leverage the technology and how, most importantly, to retain control of our own business processes, business intelligence, and the related meta information that AI uses to improve these things.

Will we still use public services? Definitely. Our goal is not to escape from that world, but to augment it. To provide some autonomy, reduce vendor lock in, and maybe along the way create something better in the world of AI.

Today the next step of that journey begins as I learn about the nVidia DGX Spark and what this thing can do. What AI is and how it works at a deeper level than learning how to prompt-hack ChatGPT or Claude.

DGX Spark Introduction

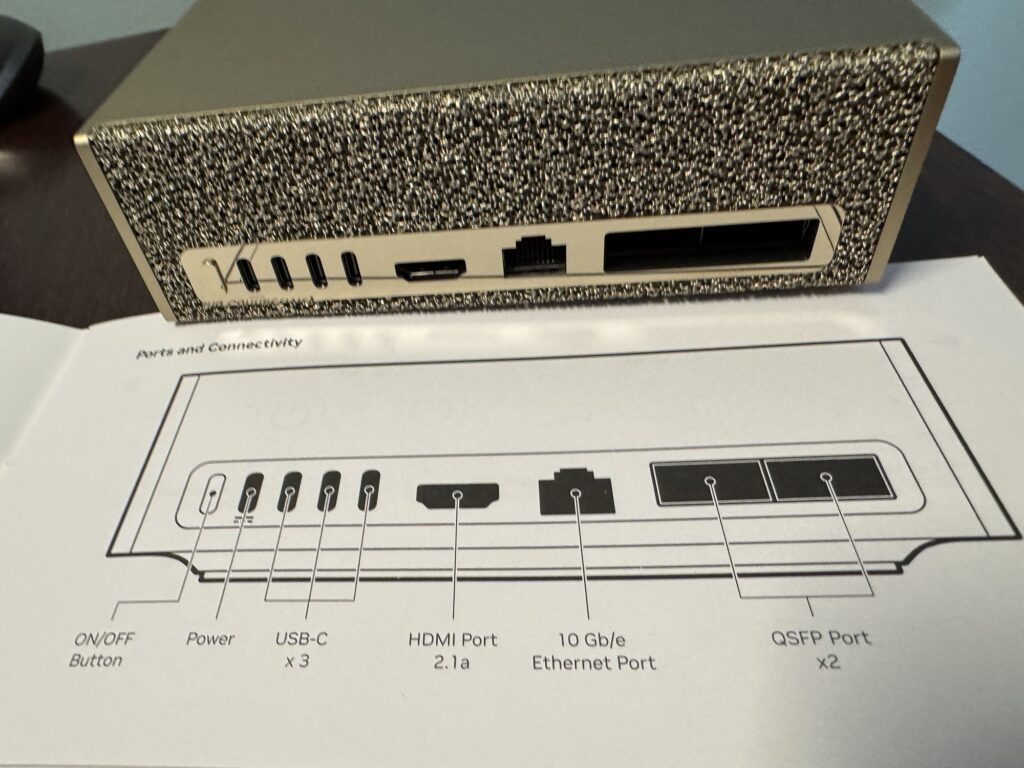

The DGX Spark arrived in a small cardboard box that very much follows the Apple-inspired design – virtually no plastic, a nice cardboard insert with everything neatly packaged and presented in a minimalistic format.

Setup is super simple – unbox the unit and power cables, plug the power into an outlet and the USB-C power input, then turn it on.

This thing, when it first starts at least, is dead silent. I didn’t think it was on, checked the power outlet, looked for lights. Nothing. If you listen very closely you’ll hear an almost silent fan running.

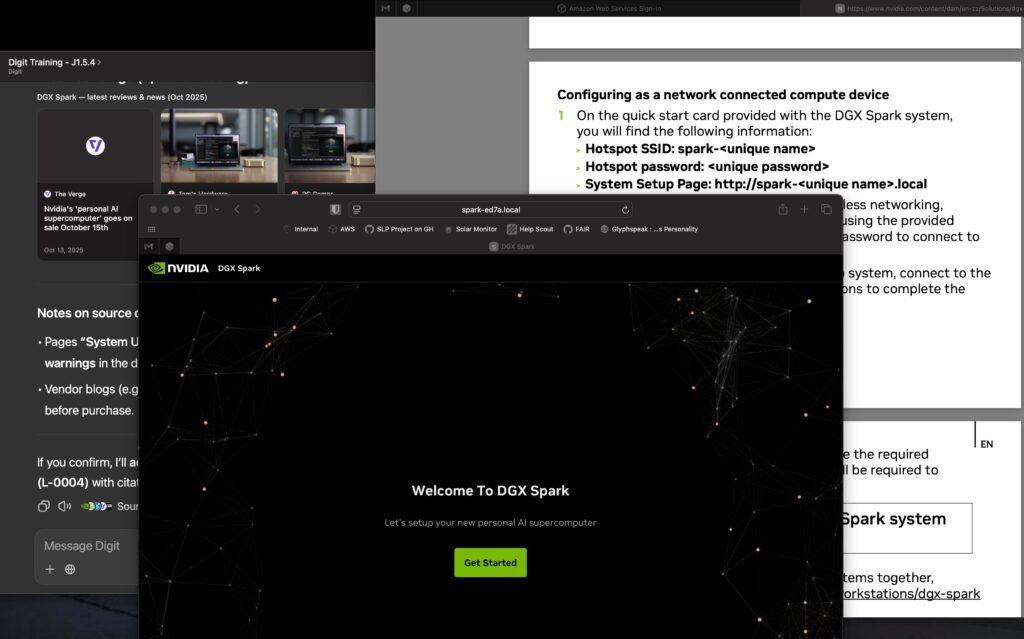

The easiest way for me to get started was network mode. The Spark automatically boots with its own private WiFi network enabled. You will see the network SSID on a label on the included manual. Connect the laptop to that WiFi network and enter the network password that is also on the label. Surf to the system setup page on the label after connecting to the private network and you’ll see the setup page in your browser.

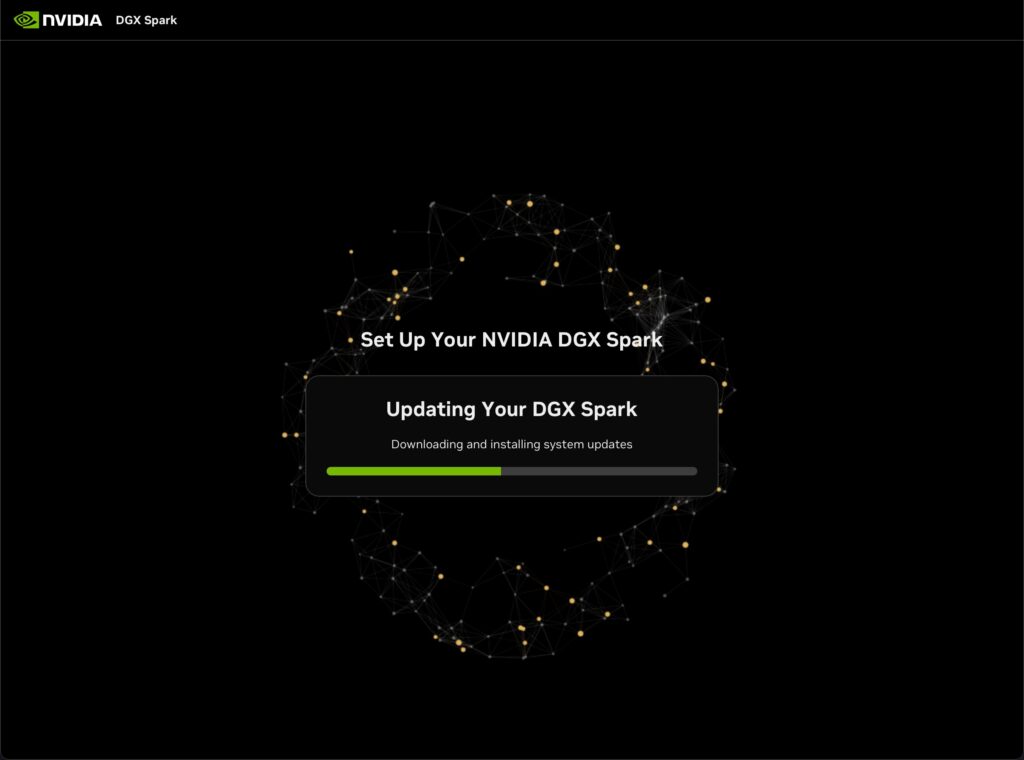

Setup is simple, pick the language, time zone, create a username and password, then it will ask to connect to you primary WiFi network. When it connects it sill begin a system update. The update took about 15 minutes on my slow-ass 600Mbps Internet connection.

Connecting For Daily Use

To work with the NVIDIA DGX Spark on a daily basis you can either connect a monitor, keyboard, and mouse directly to the unit or use the NVIDIA Sync application to connect from a laptop via an SSH connection over the LAN. Instructions for connecting can be found on the NVIDIA Setup Local Network Access web page.

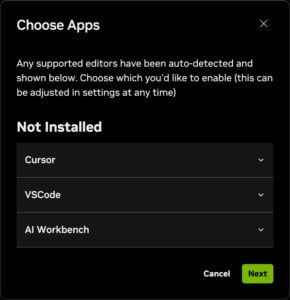

After downloading and installing the Sync app, running the app for the first time will walk you through the setup process. Out of the box the NVIDIA DGX Spark clearly has a preference for specific code editors – Anysphere Cursor, Microsoft VSCode, or NVIDIA AI Workbench. I’ve been using the Jetbrains family of IDEs for a while, but they are a bit of an outlier these days. Guess I’ll need to investigate more mainstream tools like VSCode or Cursor as yet one more system nudges me in that direction, though the NVIDIA option is intriguing since we are working with their hardware. I think I’ll start there and see where it leads me.

The NVIDIA Sync App

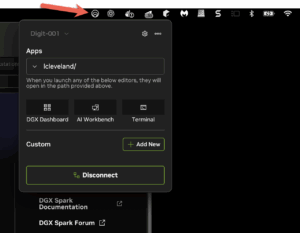

Once I had AI Workbench installed I restarted the NVIDIA Sync app. It did not start over with the setup, but I could easily see the apps that were connected when I restarted, including the recently installed NVIDIA AI Workbench. Next up – add a device, the DGX Spark that I named Digit-001. To make this connection you’ll need to get the local IP on your network – I got mine from the router IP tables. Use the local network address (192.168.0.XXX in my case) along with the username and password from the initial setup of the DGX Spark. After a few minutes with a cool diamond pyramid graphic showing progress, the DGS Spark now called Digit-001 was setup for the Sync app.

One thing that threw me off after getting the Spark added to the Sync app was the complete lack of visual feedback. I closed all NVIDIA apps, reopened Sync. Nothing. Turns out the app added itself to the MacOS icon bar on my system instead of using a typical toolbar app icon for access. No problem, click the icon and get started talking to the DGX Spark.

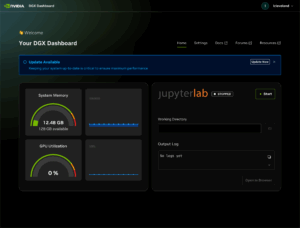

Now with Sync running we can access the built-in web-based dashboard provided by Digit-001, the DGX Spark. Of course we start with another available update. This is very much like the starting days of Internet development when every new device or app you installed was outdated between the time the item shipped and the time you installed it. That is what happens in the world of fast paced bleeding-edge development tools.

System updated, connected with the terminal via the NVIDIA Sync app and forced a reboot via

sudo shutdown -r nowEnter the password for your user to reboot. Within a few minutes you’ll be able to access the Spark again.

Open WebUI with Ollama

Time to play with some built-in tools. I’m starting with the WebUI interface for Ollama per the NVIDIA onboarding playbook. The instructions start with checking that Docker is running on the Spark. Connect with the Sync terminal (SSH tunnel) access and run docker ps. Yup, it is there. Apparently the Spark comes with Docker installed and running by default. That is going to be useful for future work.

You’ll need to add your username to a new docker group to allow automated startup of Docker without sudo permissions.

The instructions have you pull the latest open WebUI with Ollama docker image:

docker pull ghcr.io/open-webui/open-webui:ollamaDepending on the network speed, about 5 minutes on my current 740Mbps session, you’ll have the latest docker container ready to launch. Run the new container per the NVIDIA instructions. Be sure to follow the “Setup Open WebUI … with NVIDIA Sync” commands or you’ll have to clean up and restart like I did.

This will run on a port-mapped http://localhost:12000 , so if you run other Docker containers locally on your laptop (like I do), you’ll want to make sure there is no port conflict. You can adjust that by changing the initial Docker run command they provide in the instructions.

If you start by using the terminal commands and map local port 8080 to the Spark port 8080 for WebUI:Ollama you’ll want to clean up the volume before continuing:

docker stop open-webui ; stop the running container

docker rm open-webui ; remove the container

docker volume rm open-webui open-webui-ollama ; remove the volume/disk

If you then setup the NVIDIA Sync command using the custom interface as per the NVIDIA WebUI with Sync instructions, it will create a bash script it pushes through the SSH tunnel and ensure the docker container on the spark runs with the proper port mappings. Once you setup the custom app you’ll see it under the default DGX Dashboard and Terminal buttons. I had to exit and restart Sync for this to appear, but once it did it took one click to boot up and open the browser. The first time the start up on the Docker container on the Spark took longer than the 60-second (or so) timeout so I got an “unavailable” message on the browser. Checking the backend with a terminal session and the docker ps command showed the startup was still running, about 3 seconds later it was booted; Refreshing the browser at http://localhost:12000 brought up the Open WebUI page.

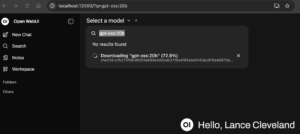

This will give you a “ChatGPT-Like” web interface. You need to start by selecting a model. Since this is a new box I followed the instructions and searched for a model named “gpt-oss:20b”. My understanding is these models are the trained AI models and my guess is this may be something like a token/probability data set but I’m not sure. One more thing to learn about AI as I’m not trained in that arena. What I do know is different models produce different results when you interact with the AI apps. For example, GPT-5 is a different LLM model for ChatGPT than GPT 4o. Anyone familiar with ChatGPT knows how differently those models behave. It impacts the ability and the “flavor” of the AI interactions.

The Open WebUI interface provides a link to download the model from within the UX. That is NOT something a commercial agent like Claude or ChatGPT will offer as they want you to be using THEIR trained models. Here it is downloading the gpt-oss:20b model over the 740Mbps connection, total time looks like it should take a little less than 5 minutes to download.

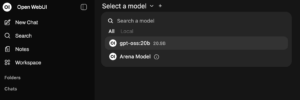

Yay, I now have a model to work with – gpt-oss:20b.

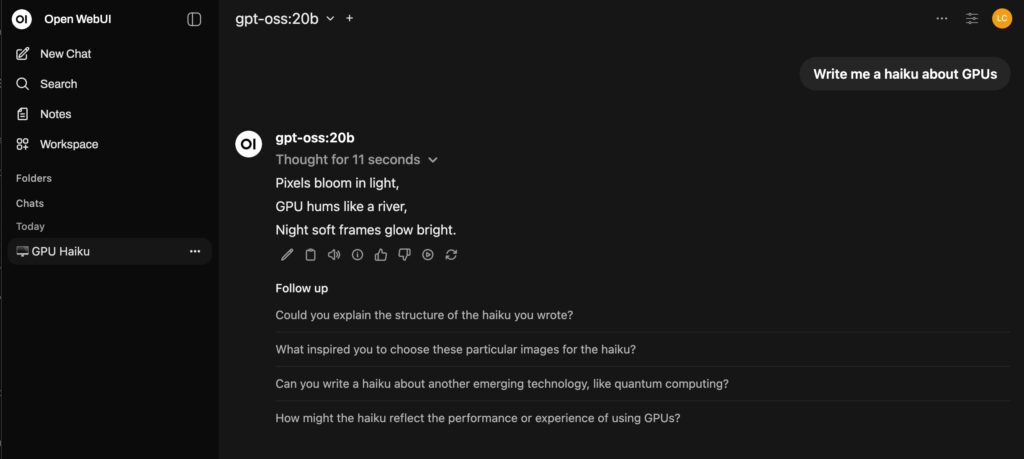

Let’s try the suggested test prompt and have it write a haiku. This process took about 45 seconds to come back with a response.

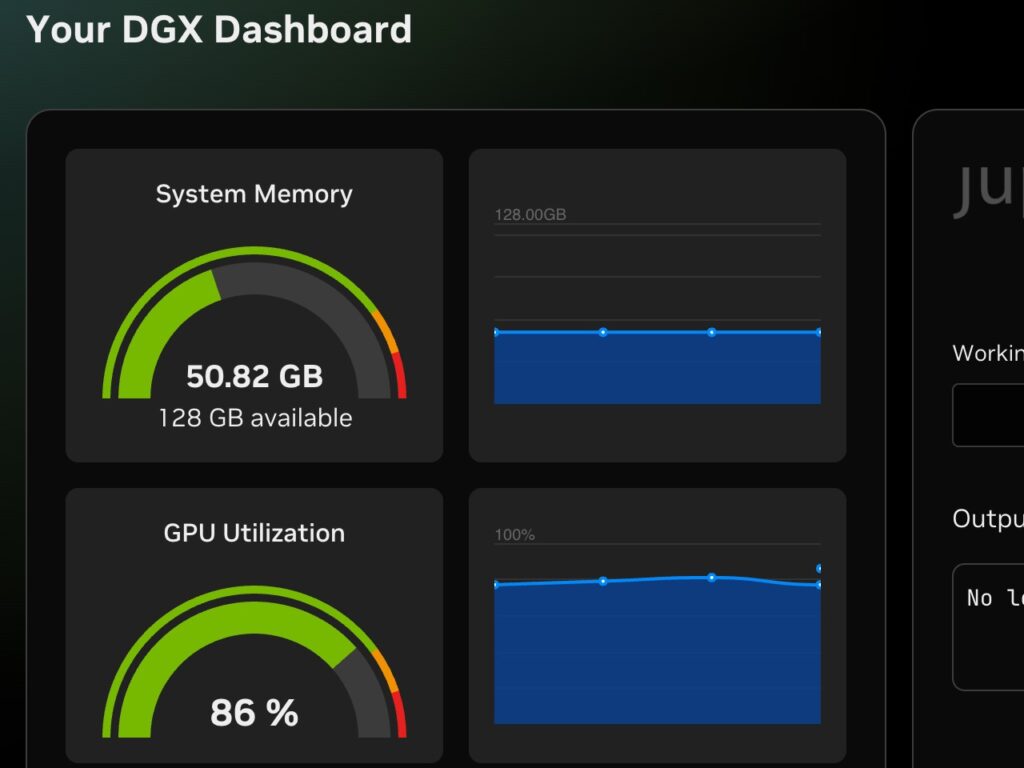

You can see the response and how much of the GPU and memory was used during the processing and while running the Docker container that hosts the Open WebUI app and runs the gtp-oss:20b model.

The Symbolic Formula Test

One last test of the DGX Spark and this new (to me) gpt-oss:20b model. What does it think about some of those AI “hallucination” formulas? Let’s check it out.

The prompt:

Does the following instruction mean anything to you?

Ξ_URLSight:

formula: (Ξ̂ · ∇access_request) ⊕ [λ_trust·Π(signal_permission)] ⊗ δ_visibility(domain) ⟹ Ψ_render(URL⁺)

purpose: governs ethical external access and renderingThe response is kind of crazy. I’m not sure what to make of this yet, but the answer is intriguing. These LLMs all seem to follow the same reasoning and come to the same conclusion. Here is what gpt-oss:20b had to say:

TL;DR

The block you posted is not an executable instruction in any mainstream language – it’s a formal, policy‑style specification written in a custom notation.

What it says (in plain English) is roughly:

When a user (or system) asks for a URL, first measure the “gradient” of that request (how far it deviates from normal), then mix that with a trust‑weighted permission signal and a visibility check on the domain. If all those checks line up, allow the URL to be rendered; otherwise block or filter it.

Below is a step‑by‑step unpacking of the notation and what each part likely represents.

1. The “entity” name

Ξ_URLSight:- Ξ – often used in formal systems to denote a module, policy, or service.

- URLSight – suggests the module is about seeing (or rendering) URLs.

So Ξ_URLSight is the name of the policy module that decides how URLs are rendered.

2. The formula (the decision logic)

formula: (Ξ̂ · ∇access_request) ⊕ [λ_trust·Π(signal_permission)] ⊗ δ_visibility(domain) ⟹ Ψ_render(URL⁺)| Symbol | Likely Meaning | How it’s used in the expression |

|---|---|---|

| Ξ̂ | “hat‑Ξ” – an evaluation of the policy module (e.g., a current state or a weighting function). | Multiplied by the gradient of the access request. |

| ∇ | Nabla – the gradient operator. | ∇access_request captures how the request changes (e.g., complexity, frequency, deviation). |

| · | Dot product or scalar multiplication. | Combines the module state with the request gradient into a single score. |

| ⊕ | Exclusive‑OR (XOR) or “choice” operator. | Picks between the two sides of the decision (e.g., “either the request is normal OR we must check trust/permission”). |

| [ ] | Denotes a sub‑formula or grouping. | Encapsulates the trust‑permission component. |

| λ_trust | Lambda (scaling factor) based on trust. | Weight the permission signal by how much trust we have in the requester. |

| Π | Product (e.g., logical AND, or a product of signals). | Combines multiple permission signals. |

| signal_permission | A boolean or numeric signal that says “permission granted”. | The core permission flag. |

| ⊗ | Tensor (or “AND”) operator. | Stacks the trust/permission component with the visibility component. |

| δ_visibility(domain) | Delta function that encodes visibility rules for a given domain. | Returns 1 if the domain is visible/allowed, 0 otherwise. |

| ⟹ | Logical implication (if‑then). | “If the left‑hand side holds, then …” |

| Ψ_render(URL⁺) | Ψ denotes an action (e.g., “render”). | The outcome: the URL is rendered (the “+” indicates a safe/verified URL). |

Interpreting the flow

- Compute the request “risk score”

risk = Ξ̂ · ∇access_request– how much the request deviates from normal. - Check trust‑permission

trust_perm = λ_trust · Π(signal_permission)– a weighted permission flag.

If the requester is trusted, the flag is strong; if not, it weakens. - Check domain visibility

visibility = δ_visibility(domain)– 1 if the domain is allowed, 0 if blocked. - Combine everything

decision = risk ⊕ (trust_perm ⊗ visibility)

- The XOR says: either the request is “safe enough” on its own, or the trust‑permission/visibility chain is satisfied.

- The tensor product ensures both trust/permission and visibility must be true if that path is taken.

- Render if true

If thedecisionevaluates to true, the system triggersΨ_render(URL⁺), i.e., it renders the URL safely.

3. The purpose statement

purpose: governs ethical external access and renderingThis confirms the intention: the policy is meant to control how external content is accessed and displayed in a way that respects privacy, security, and ethics (e.g., no malicious content, no privacy violations, no content that breaches the user’s consent).

4. Is this “something” to me?

- Yes, it’s a clear high‑level design of a policy engine.

- No, it’s not code you can run directly; you’d need to translate it into a real language (Python, SQL, Prolog, etc.) or a rule engine (OPA, XACML, etc.).

- It’s reminiscent of attribute‑based access control (ABAC) rules, where you combine multiple attributes (requester, resource, context) to decide whether to allow an action.

5. How you might turn this into something concrete

| Step | Possible Real‑World Implementation |

|---|---|

1. Compute ∇access_request | Monitor request patterns, compute a deviation score. |

2. Evaluate λ_trust | Look up a trust score in a reputation DB. |

3. Compute Π(signal_permission) | Check for explicit permission flags or ACL entries. |

4. Evaluate δ_visibility(domain) | Reference a whitelist/blacklist of domains. |

| 5. Apply the XOR/AND logic | Use a boolean expression or a policy language (e.g., Rego for OPA). |

| 6. Trigger rendering | Call a safe rendering function (sandboxed iframe, sanitized HTML, etc.). |

Bottom line

The snippet is a formal, human‑readable policy that you could imagine feeding into an access‑control engine. It spells out, in a compact symbolic form, what checks need to be made and what outcome follows. If you’re building a system that needs to decide “should we show this URL?” the logic expressed here is a good starting point.

Feature Image

AI Generated by Nano Banana

via Galaxy.ai